University of California, San Diego

I am a second-year graduate student at UC San Diego working with Loris D'Antoni. My current research focuses on constrained generation for language models and their applications to compiler testing.

Previously, I worked on low-latency trading systems at J.P. Morgan and Morgan Stanley.

Education

- University of California, San Diego, M.S. in Computer Science
  Advisor: Loris D'Antoni, Sep. 2024 - Jun. 2026 (Expected)

- P.S.G. College of Technology, B.E. in Computer Science and Engineering
  Advisor: G. R. Karpagam, Jun. 2019 - May 2023

Experience

- Morgan Stanley, Associate, Jul. 2023 - Aug. 2024

- Morgan Stanley, Software Engineer Intern, Jan. 2023 - Jun. 2023

- J.P. Morgan Chase & Co., Software Engineer Intern, Jun. 2022 - Jul. 2022

Selected Publications

Bootstrapping Fuzzers for Compilers of Low-Resource Language Dialects Using Language Models

Sairam Vaidya, Marcel Böhme, Loris D'Antoni

arXiv Preprint 2025

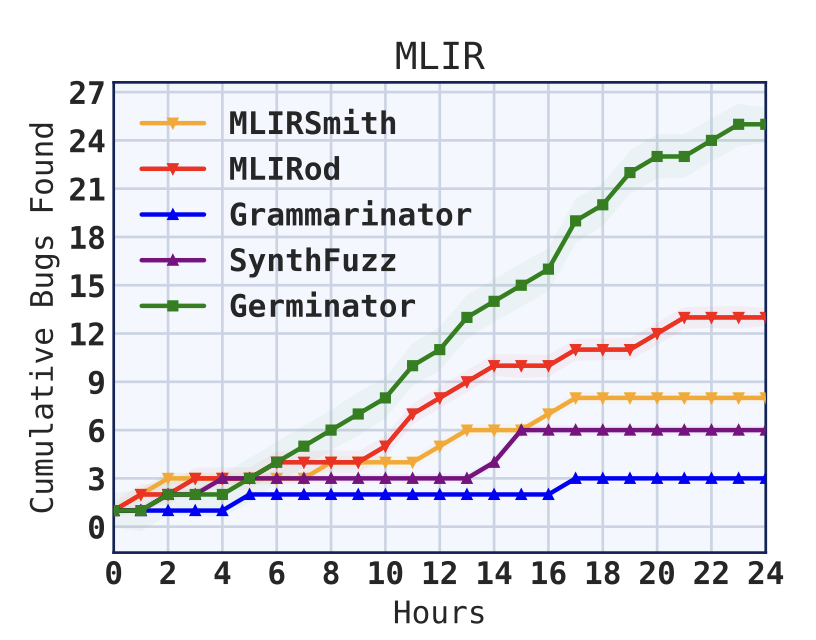

We present Germinator, a dialect-agnostic and dialect-effective fuzzing approach for extensible compilers like MLIR. By automatically extracting grammars from dialect specifications and using LLMs to generate diverse seed inputs, Germinator bootstraps coverage-guided fuzzing without manual effort. Evaluated on six MLIR projects spanning 91 dialects, it improved line coverage by 10-120% and discovered 88 previously unknown bugs.

Constrained Sampling for Language Models Should Be Easy: An MCMC Perspective

Emmanuel Anaya Gonzalez*, Sairam Vaidya*, Kanghee Park, Ruyi Ji, Taylor Berg-Kirkpatrick, Loris D'Antoni (* equal contribution)

NeurIPS 2025

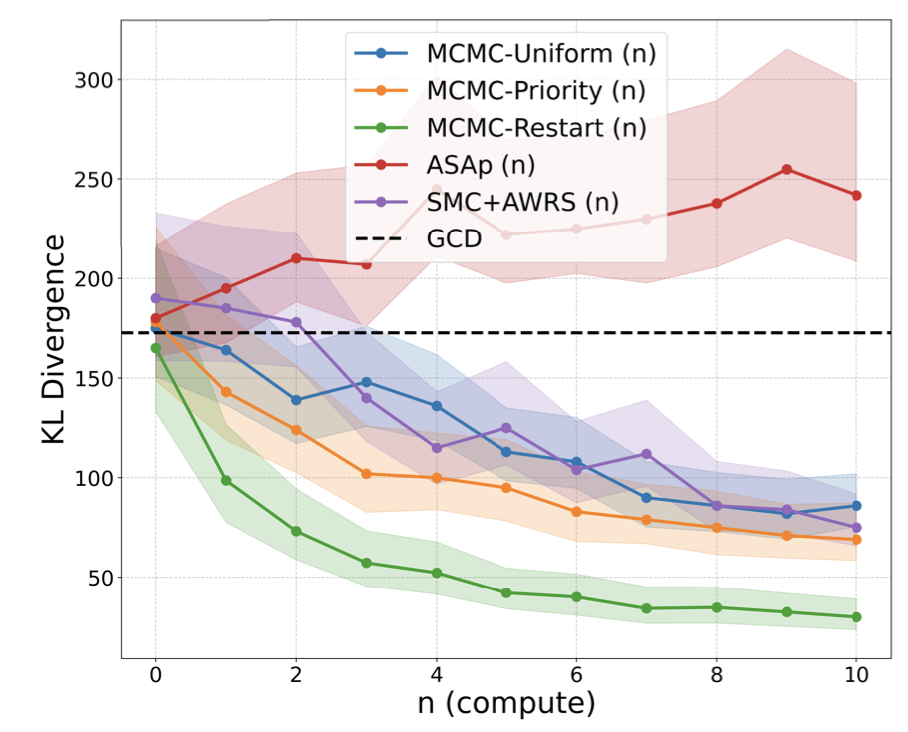

We propose a new constrained sampling framework based on Markov Chain Monte Carlo (MCMC) that satisfies constraints, converges monotonically to the true conditional distribution, and generates high-quality samples efficiently in few steps.
